Can you introduce yourself in a few words?

David BOYREL, I have been working for AVSimulation since 2005, formerly OKTAL Paris.

I started as a service provider and then I joined the development team where I worked on SCANeR around many subjects in the field of 3D: the SCANeR visual rendering engine, virtual and mixed reality, lighting simulation, sensor modeling, and Cave simulators and virtual reality headsets.

I am now the TechLead of the Visual Sensors Unreal Terrain team.

What is a sensor? What is it used for on a vehicle?

A sensor is a device that transforms an observed physical quantity (a temperature, for example) into a usable quantity (typically an electric voltage). Sensors are therefore electronic devices that are placed on the vehicle.

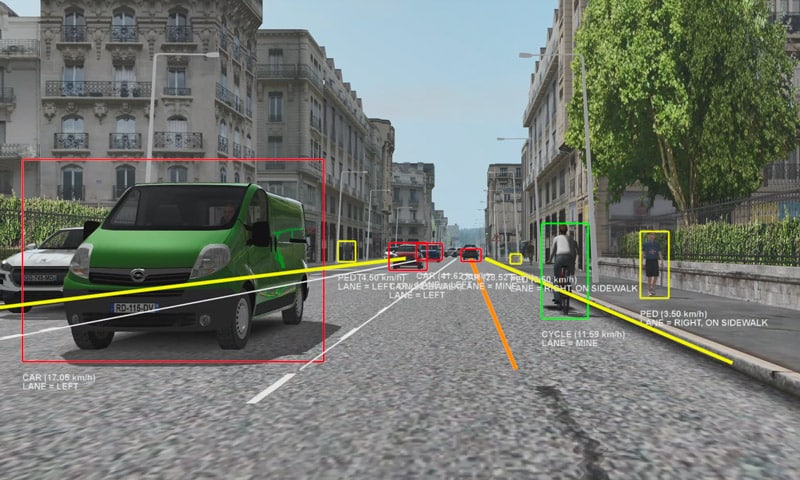

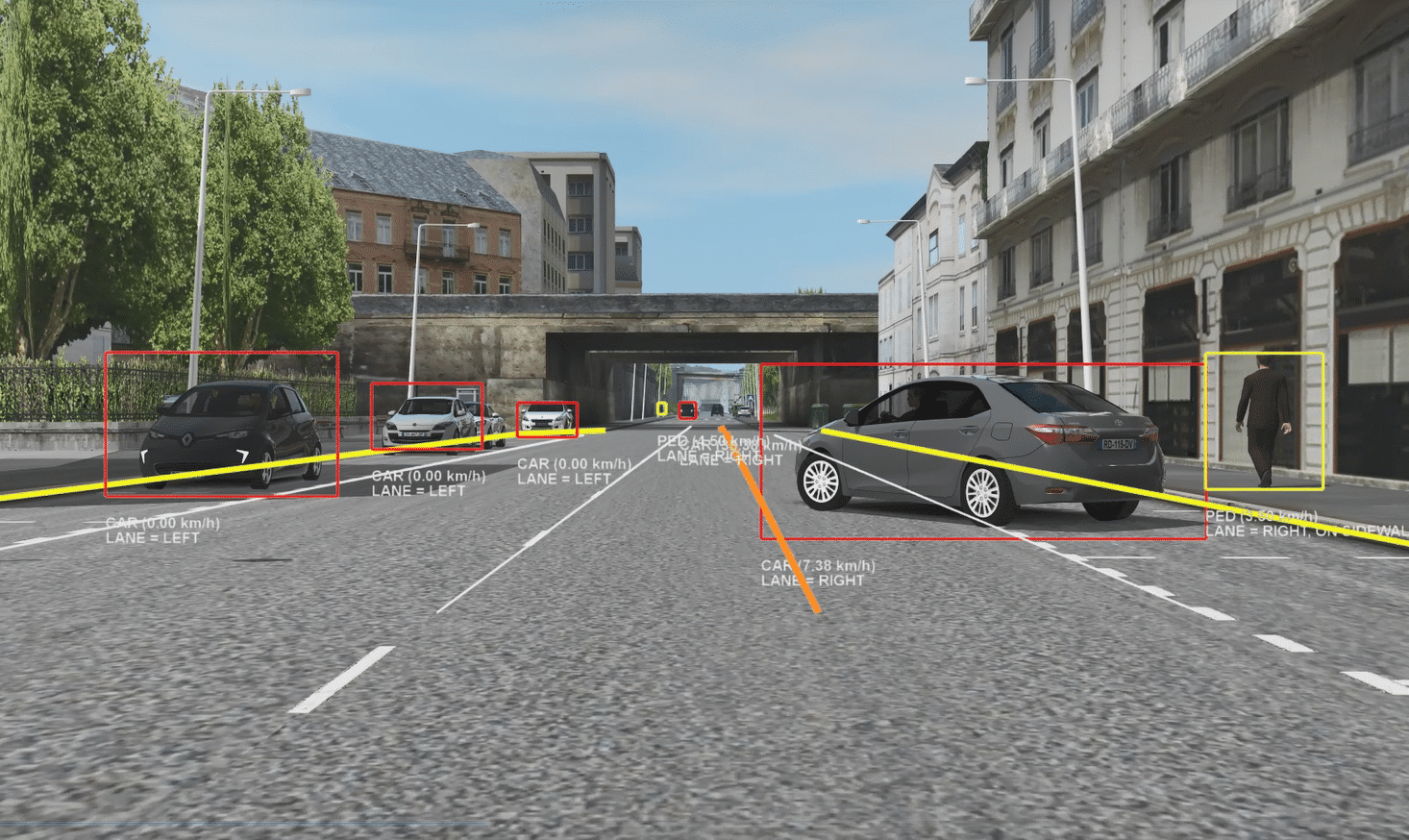

Their role is to detect and understand their surroundings by processing the signal they perceive (an image, a radar signal) and interpreting it as part of the road environment: locating signs, knowing whether other vehicles are around, locating road markings, etc. In other words, they no longer return a simple electrical value but information. This is the case, for example, of a reversing camera that is able to “read” the signs and inform the car’s computer that the speed should be set to 80 km/h.

On vehicles, sensors are used to design ADAS. These make it possible to make vehicles more and more autonomous or to improve safety. Here are some examples: the reversing camera, lane change detection, blind spot detection, emergency stop systems, assisted driving in traffic jams, etc.

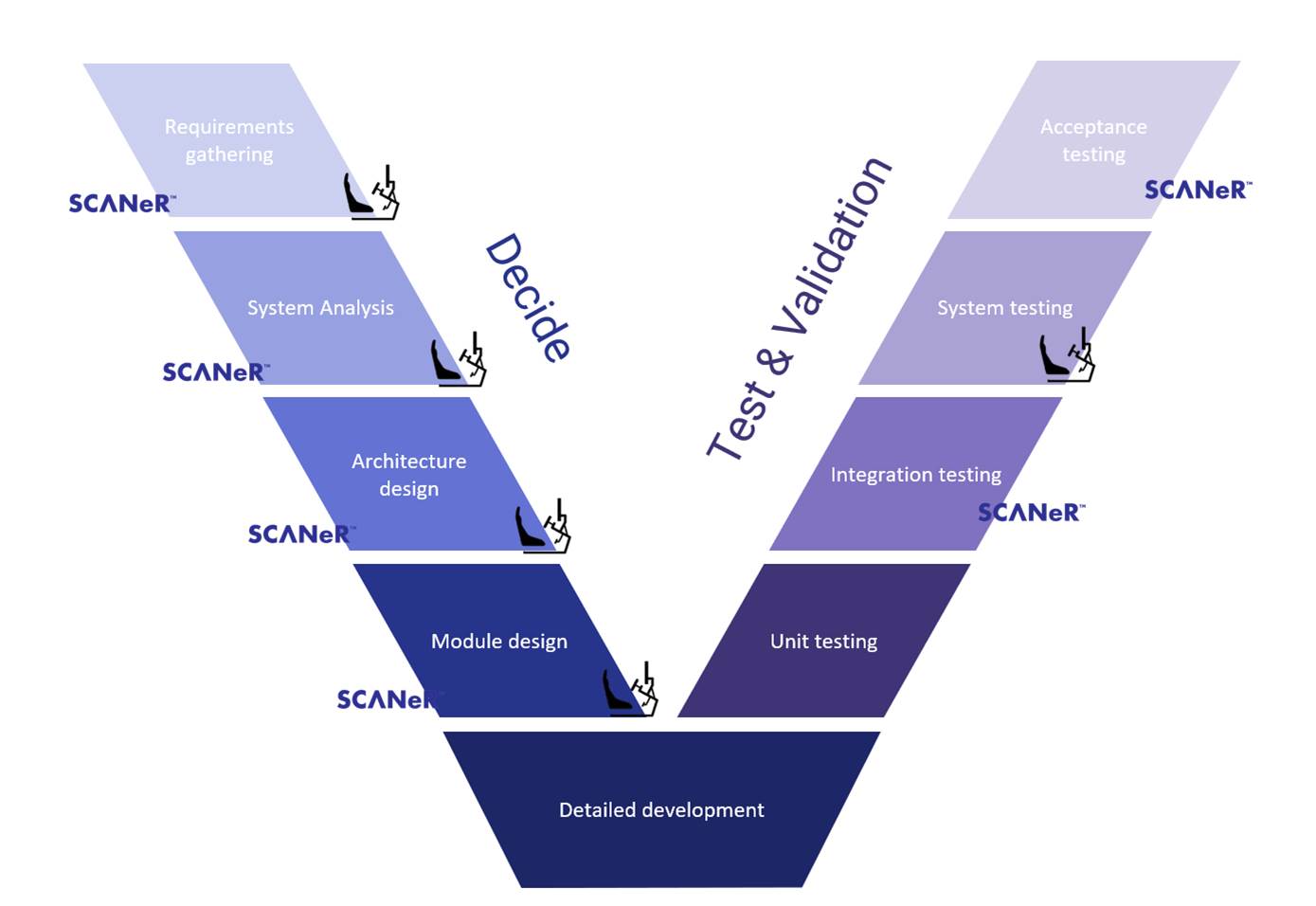

Today, sensors make it possible to analyze the scene around the car and to make decisions in different situations. They are what allows us to move towards fully autonomous driving, where autonomous driving models will be able to analyze more and more delicate situations, based on increasingly rich sensor data. We are therefore witnessing an exponential increase in the complexity of ADAS and their development. This is why simulation, which allows decisions to be made very early in the design cycle, has become an obvious choice for everyone.

Is it possible to simulate sensors?

Absolutely, and this is one of the major assets of SCANeR.

Simulation makes it possible to create specific situations (pedestrian crossing, emergency braking, line crossing, etc.) and to generate the detection information that a set of real sensors would provide in these situations. This information is sent to mathematical data fusion models, which cross-reference and process the data provided by all the sensors in order to reconstruct the situation as accurately as possible.

To this end, the majority of the sensors we provide in SCANeR are so-called “functional” sensors, or so-called level L1 sensors: they simulate the detection information, but not the sensor technology.

Level L3 sensors, which take into account the sensor technology, are also available in SCANeR.

What is the purpose of functional sensors? Are they faultless?

Functional sensors are used to develop driving models. Engineers are interested in the information that the sensors return and that will feed the driver assistance system they are developing. If I need to test the behavior of an ADAS, I need my sensor to tell me that a pedestrian is crossing. I don’t need to know how the lenses focus the light on the camera’s CCD, nor do I need to know the logic of the camera program that detects the pedestrian in question.

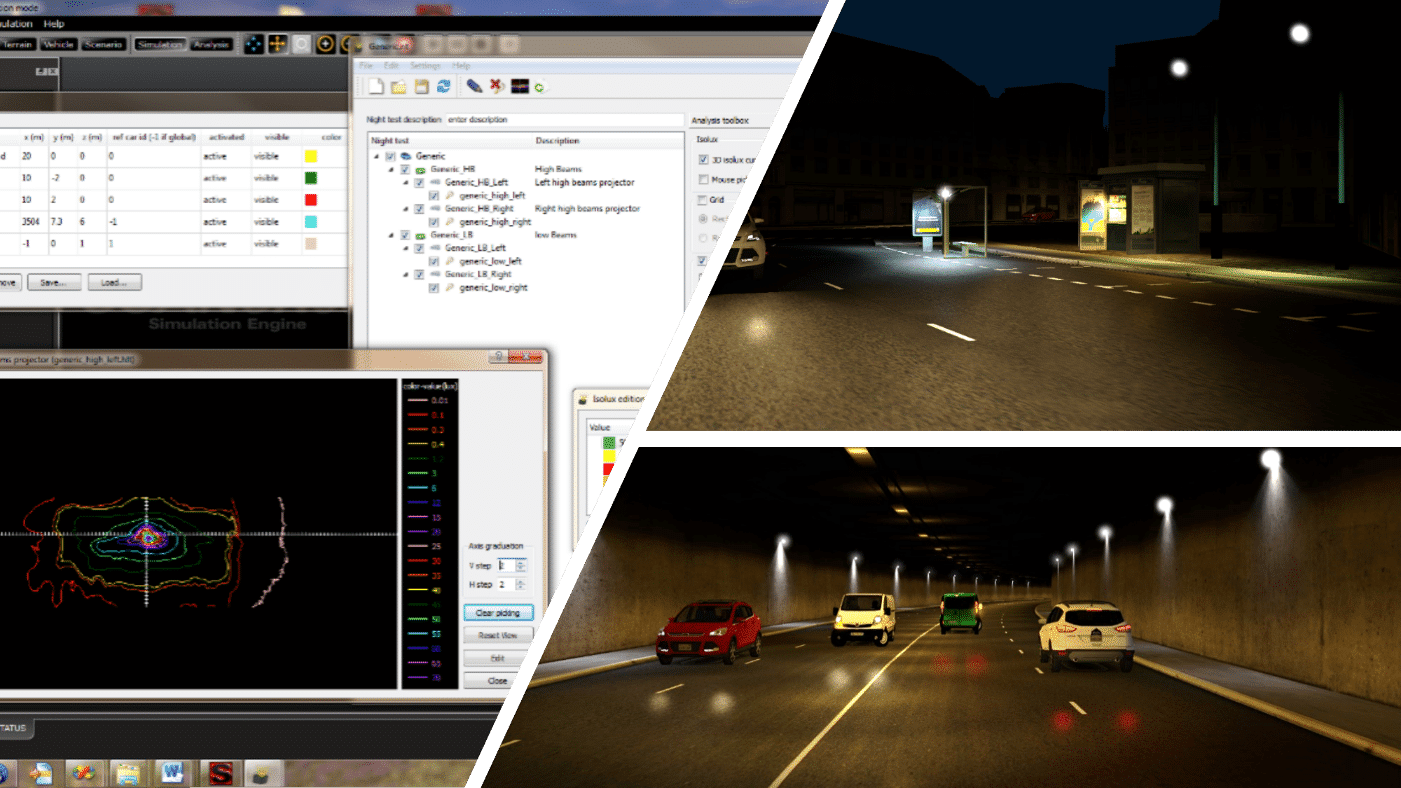

By using functional sensors and simulation to develop the driving models, the idea is to eliminate as many physical tests as possible. These are expensive and sometimes dangerous. An example helps to understand the stakes. In the middle of summer, at 3:00 pm, it is quite possible to simulate the London fog that we encounter at 6:00 in November. Also, as vehicle headlights are increasingly considered as ADAS, the return on investment of a headlight simulator can be counted in months. Headlights can be tuned 24/7. There is no need to send teams to the Nordic countries to benefit from long nights. Finally, the engineers work in a very short loop around the simulator, which means that the code and logic can be changed easily and quickly. If necessary, it is easy to invite other teams to come and work on the sensors. Of course, everything I explain about headlights applies to other sensors: radar, lidar, camera…

In a very practical way, when a car manufacturer and equipment suppliers design a sensor, they will use it to retrieve information from the surrounding traffic and then design a driving model. This driving model will make the vehicle slow down, overtake, accelerate, etc. in a given situation.

With simulation, it is possible to recreate all these situations. Indeed, SCANeR knows and “draws” the world around the vehicle. It knows that at 50 meters a pedestrian is crossing outside the lane. It will therefore simulate what the sensor is able to detect so that the model that analyzes the information can make a decision.

If the decision taken is not good, the engineers can quickly intervene on the code and restart the simulation. At a more advanced stage, there will probably be thousands of scenarios to run. In this case, simulations are run at night locally or in the cloud and in the morning, engineers can focus on scenarios where the driving model does not meet the requirements: braking too late, accelerating too sharply…
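As an illustration of the morning triage described above, here is a minimal sketch that keeps only the scenarios where the driving model misses a requirement. It is not SCANeR code: the `ScenarioResult` fields, the function names and the two thresholds are hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class ScenarioResult:
    """Summary of one overnight run (hypothetical fields, not a SCANeR format)."""
    name: str
    min_distance_m: float   # closest approach to the obstacle during the run
    peak_accel_ms2: float   # strongest longitudinal acceleration during the run


def failed_requirements(result, min_gap_m=2.0, max_accel_ms2=3.0):
    """Return the list of requirements this run violates (thresholds are assumptions)."""
    failures = []
    if result.min_distance_m < min_gap_m:
        failures.append("braking too late")
    if result.peak_accel_ms2 > max_accel_ms2:
        failures.append("accelerating too sharply")
    return failures


def triage(results):
    """Map scenario name -> violated requirements, skipping runs that passed."""
    report = {}
    for result in results:
        failures = failed_requirements(result)
        if failures:
            report[result.name] = failures
    return report


overnight = [
    ScenarioResult("pedestrian_crossing_50m", min_distance_m=0.8, peak_accel_ms2=1.2),
    ScenarioResult("traffic_jam_follow", min_distance_m=4.5, peak_accel_ms2=3.6),
    ScenarioResult("lane_change_dry", min_distance_m=6.0, peak_accel_ms2=1.9),
]
report = triage(overnight)
for name, failures in report.items():
    print(name, "->", ", ".join(failures))
```

Only the first two scenarios appear in the report, so the engineers can go straight to the runs that need attention.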

SCANeR’s functional sensors are indeed faultless because we do not simulate their technology. Our goal is to avoid introducing errors due to the simulation that would not be present in the real sensor. Because the simulation in SCANeR can be completely parameterized, the sensor detects exactly what is happening. We do not introduce errors via the implementation of the detection algorithm, which returns exactly what the simulation contains.

If users wish to introduce errors, they do so by inserting statistical error models that they have previously parameterized. For example, positional perturbations are a type of error frequently introduced in SCANeR to alter the accuracy of the points on the various targets detected.
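To make the idea of a positional perturbation concrete, here is a minimal sketch that adds zero-mean Gaussian noise to the points returned by an ideal sensor. It does not use the SCANeR API: the `Detection` class, the `perturb_positions` function and the choice of a Gaussian model with a single standard deviation `sigma_m` are assumptions made for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class Detection:
    """One point reported by an ideal, error-free sensor (hypothetical type)."""
    x: float  # metres, in an assumed sensor frame
    y: float
    z: float


def perturb_positions(detections, sigma_m, rng):
    """Return a noisy copy of the detections.

    Each coordinate receives independent zero-mean Gaussian noise with
    standard deviation sigma_m (an assumed, user-chosen error model).
    """
    return [
        Detection(
            d.x + rng.gauss(0.0, sigma_m),
            d.y + rng.gauss(0.0, sigma_m),
            d.z + rng.gauss(0.0, sigma_m),
        )
        for d in detections
    ]


rng = random.Random(42)  # fixed seed so a test run can be reproduced
ideal = [Detection(50.0, -1.5, 0.0), Detection(12.0, 3.0, 0.5)]
noisy = perturb_positions(ideal, sigma_m=0.1, rng=rng)
for before, after in zip(ideal, noisy):
    print(before, "->", after)
```

Keeping the ideal detections and the perturbed copy side by side makes it easy to check how much error the downstream driving model tolerates.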

What types of sensors are available in SCANeR?

First of all, we have the ultrasonic sensors. The back-up alarm that beeps when approaching an obstacle is an example.

Radar and camera sensors can detect moving targets or signs. For example, a radar will pick up a sign without being able to read what is on it. The camera will complete it by reading what is written on it.

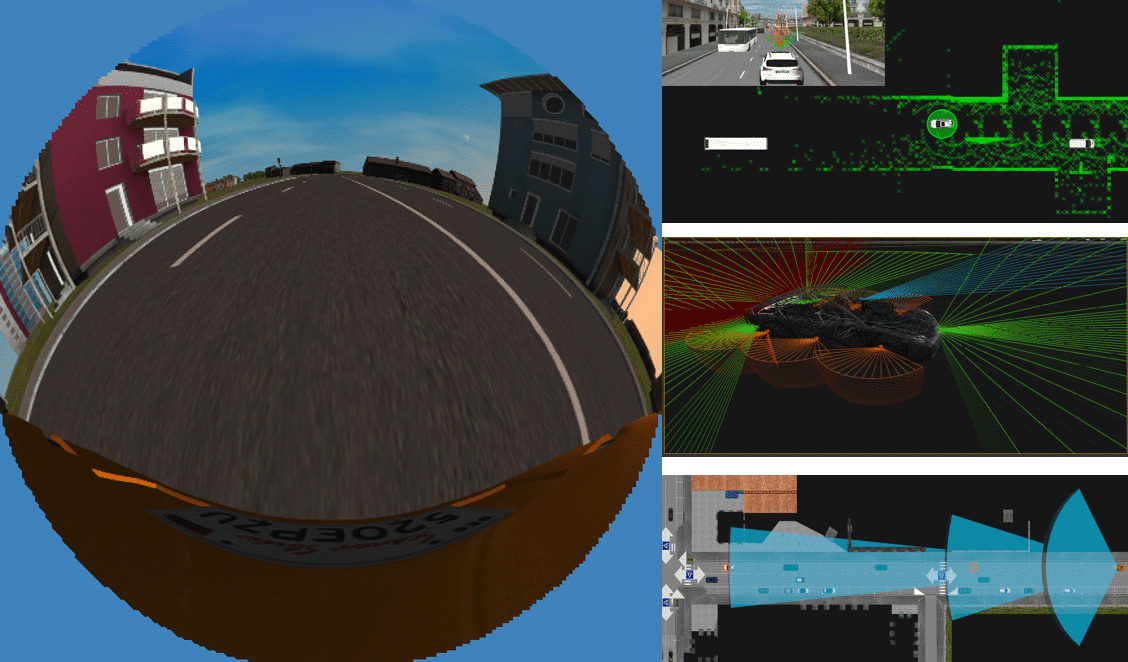

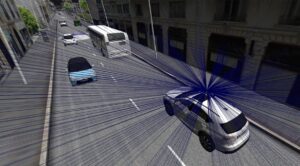

Then we have the lidar sensor; lidar stands for light detection and ranging. It is a set of lasers that generates a point cloud from which a three-dimensional view of the environment around the equipped vehicle can be reconstructed.

AVSimulation also offers the lighting sensor, or luxmeter, which evaluates the luminosity of a scene.

Finally, the GPS, coupled with the Electronic Horizon, is a sensor that detects trajectories.

Each sensor has its specificities and no sensor can cover all the uses. Simulating several different sensors together allows us to merge the returned information and to develop more resilient ADAS more quickly.
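As a small illustration of what merging the returned information can mean, the sketch below fuses two distance estimates of the same target by inverse-variance weighting. This is one textbook technique, not the fusion model used by SCANeR or by any particular customer; the function name, the example values and the assumption of independent, unbiased errors are all hypothetical.

```python
def fuse_estimates(estimates):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Assumes the measurement errors are independent and unbiased, so the
    most precise sensor gets the largest weight.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    fused_variance = 1.0 / total
    return fused_value, fused_variance


# Distance to the same target in metres, with an assumed variance per sensor.
radar = (50.4, 0.25)   # radar: precise in range
camera = (49.0, 4.0)   # camera: less precise in range
distance, variance = fuse_estimates([radar, camera])
print(f"fused distance: {distance:.2f} m, variance: {variance:.3f}")
```

The fused variance is smaller than that of either sensor alone, which is the basic argument for combining several sensors rather than relying on one.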

How many functional sensors can you simulate at the same time?

No limit! More seriously, the SCANeR architecture is very modular and distributed in nature, so it is very easy to distribute the simulation of sensors across different processors. If the simulator or the simulation platform has several machines, the engineer distributes the computational load across them. The more hardware resources are available, the more sensors can be simulated in parallel. It is also a state of mind. SCANeR does not require heavy investment from the start. We understand that at the beginning it is about developing an emergency stop with a workstation and a sensor. However, as time goes by, the ADAS will become more sophisticated. SCANeR will support the team by allowing them to add compute nodes, sensors, etc.

What do AVSimulation sensors have to offer our customers?

The first advantage of SCANeR is its open architecture and numerous APIs (programming interfaces) that give fusion models access to all simulation data.

The second advantage is the ease of coupling sensors with other aspects of the simulation. Everything is open, modular, accessible. This is a decisive argument for users. For example, the lighting simulation can be used to simulate LED arrays, systems that dim the light to avoid glare. This spares the driver from switching manually from high to low beam. The sensor plays an essential role here because it detects the target (the vehicle you are passing) and makes it possible to lower the light only in the target area to avoid blinding its driver.

Can you confirm that the sensors use the GPU (Graphics Processing Unit) if it is present?

Indeed, the most demanding sensors use the GPU because it is essential for real-time 3D rendering and for evaluating the occlusions of the elements in the scene. As soon as there is a 3D scene, the GPU is used. Since version 2021.1, SCANeR benefits from a new 3D engine (UXD) which is based on Unreal technologies and platform. This is a great opportunity and it takes our sensor simulations to a new level (finer management of materials, camera effects, etc.). The Unreal platform will allow us to develop complementary sensors such as cameras with specific optics.

What is the difference between L1, L2 and L3 sensors?

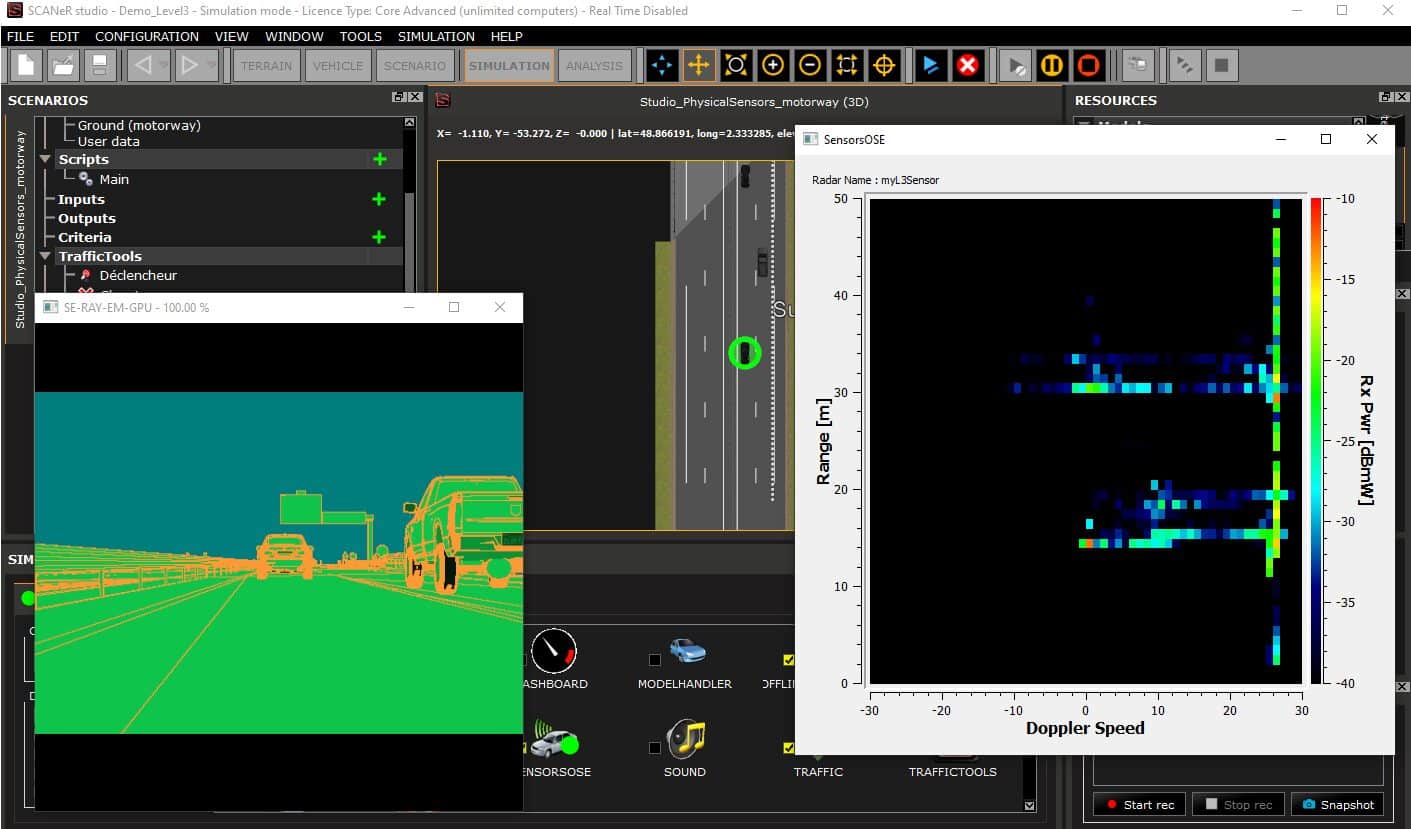

The levels L1, L2 and L3 represent the level of processing of the raw signal of the sensor. An L3-level sensor provides the raw, unprocessed signal: a Doppler signal for a radar, an image for a camera. An L1-level sensor provides data resulting from the processing of the raw signal: detected vehicles, signs and lines.

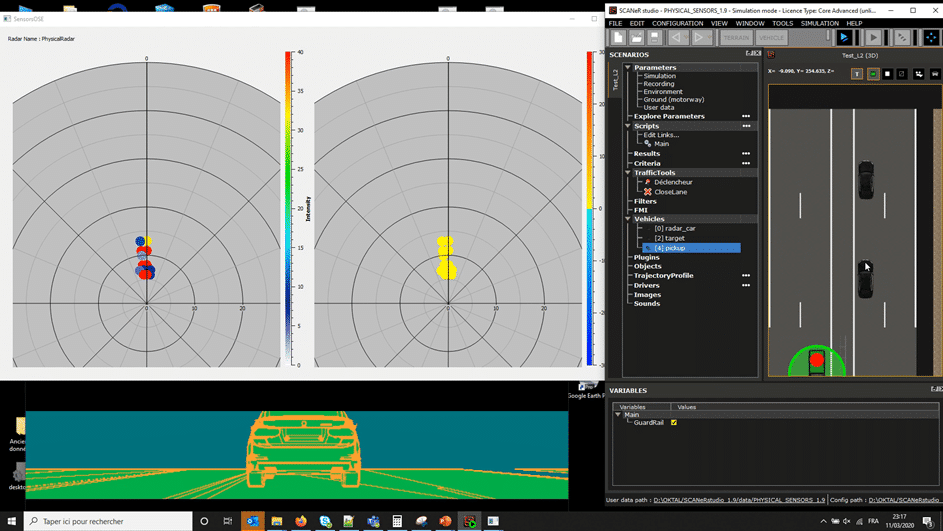

The L3-level sensors require more physical modeling of the scene. For L3-level radars, we use the technologies of our partner company OKTAL-SE.

OKTAL-SE, which is also part of the SOGECLAIR group, simulates the sensor technology. They use a physical description of the scene and the materials are described according to their reaction to certain stimuli (radar wave, light beam). In SCANeR, the description of our materials is purely visual. However, to simulate a radar sensor, we need a different description in the electromagnetic domain, so OKTAL-SE is in charge of this task.

For example, for radar, we count 3 levels: L1, L2 and L3. Level 1 is a purely functional sensor, which sends back processed information as output. Level 2 is the intermediate level: a raw signal that has already been processed into intensity spots, in other words groupings of points, each with a level of signal reflection intensity. Such a grouping alerts the user that there is an obstacle; further processing then interprets this information to determine whether it is a sign, a vehicle, a pedestrian or something else. Level 3 is the raw signal, such as the Doppler signal.

[OKTAL-SE is a long-time partner, their simulated sensors are integrated in SCANeR].
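The step from L2 intensity spots to obstacle candidates described above can be sketched as a simple distance-based grouping of strong returns. This is only an illustration of the principle, not the processing used in SCANeR or by OKTAL-SE: the `IntensityPoint` type, the function names and the two thresholds are hypothetical.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class IntensityPoint:
    """One L2-style return: a position and a reflection intensity (hypothetical type)."""
    x: float          # metres
    y: float          # metres
    intensity: float  # normalised reflection intensity, assumed in [0, 1]


def is_close(point, cluster, max_gap_m):
    """True if the point lies within max_gap_m of any point already in the cluster."""
    return any(hypot(point.x - q.x, point.y - q.y) <= max_gap_m for q in cluster)


def group_points(points, min_intensity, max_gap_m):
    """Drop weak returns, then group the remaining points that lie close together."""
    clusters = []
    for p in points:
        if p.intensity < min_intensity:
            continue
        near, far = [], []
        for cluster in clusters:
            (near if is_close(p, cluster, max_gap_m) else far).append(cluster)
        # Merge the new point with every cluster it touches.
        merged = [p] + [q for cluster in near for q in cluster]
        clusters = far + [merged]
    return clusters


def centroid(cluster):
    """Mean position of a cluster, used here as the obstacle position."""
    n = len(cluster)
    return (sum(p.x for p in cluster) / n, sum(p.y for p in cluster) / n)


returns = [
    IntensityPoint(20.0, 0.0, 0.9),
    IntensityPoint(20.3, 0.2, 0.8),
    IntensityPoint(45.0, -3.0, 0.7),
    IntensityPoint(30.0, 1.0, 0.05),  # weak return, filtered out
]
clusters = group_points(returns, min_intensity=0.2, max_gap_m=1.0)
obstacles = [centroid(c) for c in clusters]
print(obstacles)
```

Classifying each obstacle as a sign, a vehicle or a pedestrian is the further processing that turns this intermediate result into L1-style information; it is deliberately left out of the sketch.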

At level L2, there are radar and lidar sensors but no camera?

There is no intermediate level for cameras. The raw output of a camera is its image, so the levels defined for radar and lidar do not apply. On the one hand, we can create L1 camera sensors that send detection information listing the entities in the scene. On the other hand, it is also possible to simulate the raw image output of a camera using 3D rendering. SCANeR users can retrieve the raw image, analyze it themselves, and then do their own detection.

Different types of effects can be applied on the image. Often, to do image detection, we use segmentation: flat colors are applied on different elements of the scene to teach an image analysis algorithm to detect, for example, a vehicle or lines.
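As an illustration of how a flat-color segmentation image can be turned into training labels, here is a minimal sketch that maps each pixel color to a class name. The color palette, the class names and the function names are hypothetical and do not reflect the colors SCANeR actually uses; the image is a plain nested list of RGB tuples to keep the example free of dependencies.

```python
from collections import Counter

# Hypothetical palette: one flat RGB color per class in the segmentation render.
CLASS_COLORS = {
    (255, 0, 0): "vehicle",
    (255, 255, 255): "lane_marking",
    (0, 0, 255): "pedestrian",
}


def color_image_to_labels(image, class_colors, unknown="background"):
    """Convert rows of RGB tuples into rows of class names."""
    return [[class_colors.get(pixel, unknown) for pixel in row] for row in image]


def pixel_counts(label_image):
    """Count how many pixels belong to each class."""
    return Counter(label for row in label_image for label in row)


# A tiny 2 x 3 segmentation image standing in for a rendered frame.
image = [
    [(255, 0, 0), (255, 0, 0), (0, 0, 0)],
    [(255, 255, 255), (0, 0, 255), (0, 0, 0)],
]
labels = color_image_to_labels(image, CLASS_COLORS)
counts = pixel_counts(labels)
print(labels)
print(dict(counts))
```

Because the colors are flat, the mapping is an exact lookup, which is what makes segmentation renders convenient as ground truth for an image analysis algorithm.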

A word of conclusion?

We are gradually migrating the entire 3D engine to the new UXD engine. This is a more powerful and very interesting tool for lidar simulation, among other things, with the millions of calculations that must be done in parallel.

The Unreal* platform also opens up a lot of possibilities, especially for material descriptions. With the old rendering engine, we had an artistic description of colors. Now, with UXD, we bring a much more physical description and we are able to truly identify the materials in the scene.

Finally, Unreal is a PBR (Physically Based Rendering) engine. The immediate implication for all users is that this more physical information brings an unparalleled richness to the detection and simulation of sensors.

*To learn more about the Unreal platform, read the interview: The Interview: Everything you need to know about the use of the Unreal engine at AVSimulation